Keil, Mark; Li, Lei; Mathiassen, Lars; Zheng, Guangzhi: The influence of checklists and roles on software practitioner risk perception and decision-making; in: Journal of Systems and Software, Vol. 81 (2008), No. 6, pp. 908-919.

http://dx.doi.org/10.1016/j.jss.2007.07.035

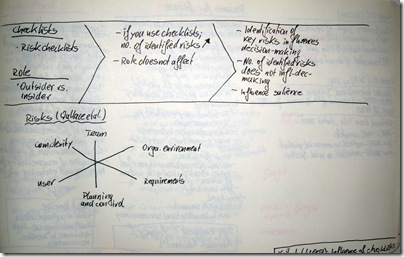

In this paper, the authors present the results of 128 role plays conducted with software practitioners. The role plays examined the influence of checklists on risk perception and decision-making. The authors also controlled for the participant's role: insider (project manager) or outsider (consultant). They found that the role had no effect on the risks identified.

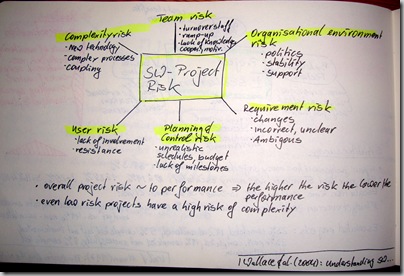

Keil et al. created a risk checklist based on the software risk model first conceptualised by Wallace et al. This model distinguishes six categories of risk: (1) Team, (2) Organisational environment, (3) Requirements, (4) Planning and control, (5) User, (6) Complexity.

In their role plays, the authors found that checklists have a significant influence on the number of risks identified. However, the number of risks identified does not influence decision-making. Instead, decision-making is influenced by whether participants have identified certain key risks. Risk checklists can therefore influence the salience of risks, i.e., whether they are perceived at all, but they do not influence decision-making itself.