Bourgault, Mario; Drouin, Nathalie; Hamel, Émilie: Decision Making Within Distributed Project Teams – An Exploration of Formalization and Autonomy as Determinants of Success; in: Project Management Journal, Vol. 39 (2008), Supplement, pp. S97–S110.

DOI: 10.1002/pmj.20063

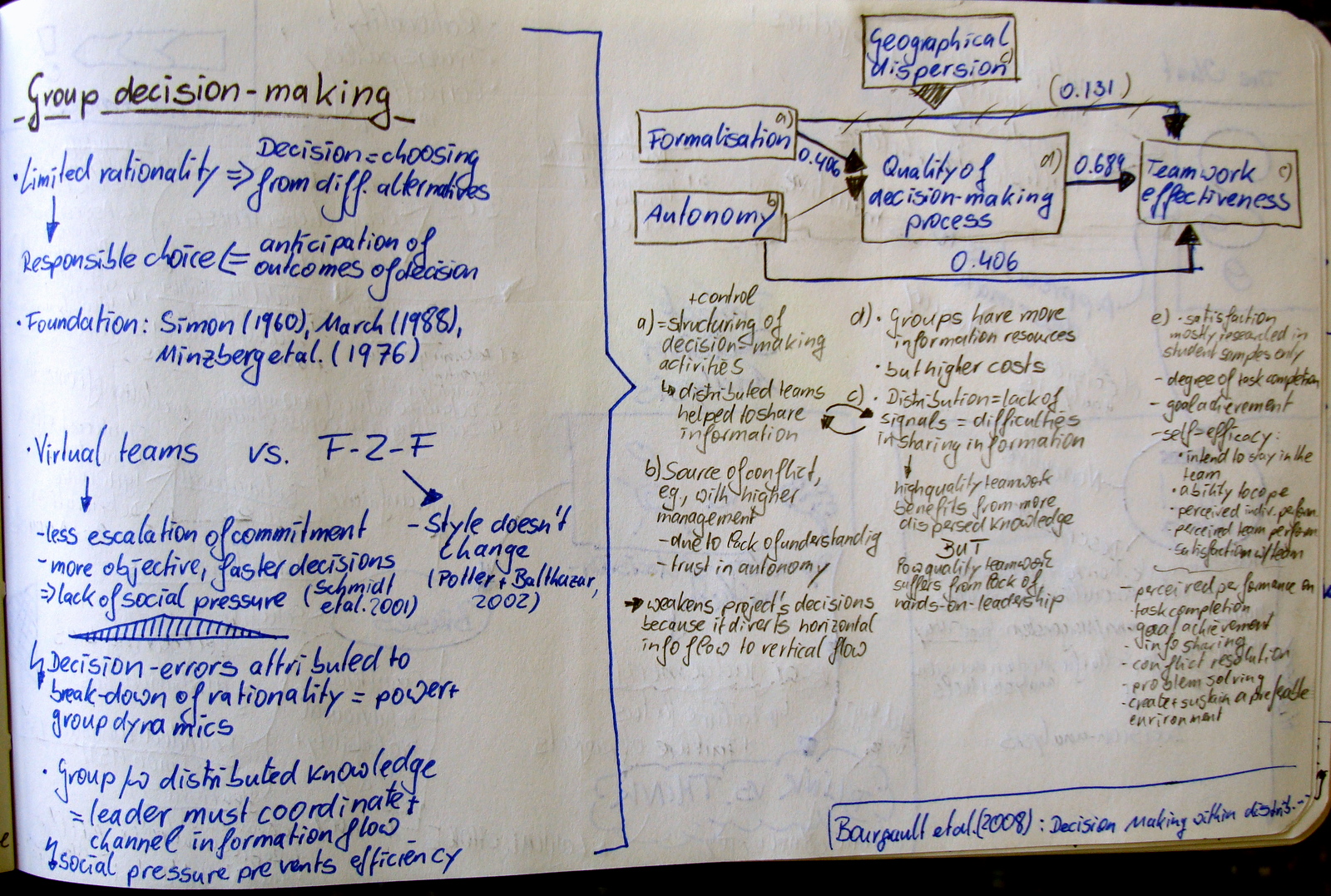

Bourgault et al. analyse group decision making in virtual teams. Their article is based on the principles of limited rationality, i.e. deciding is choosing from different alternatives, and responsible choice, i.e. deciding is anticipating outcomes of the decision.

The existing literature is divided on the effects of virtualising teams. Some authors argue that virtual teams are subject to less social pressure and are therefore less likely to show escalation-of-commitment behaviour, whilst making more objective and faster decisions. Other authors find no difference in working style between virtual and non-virtual teams. In general, the literature attributes most decision errors to breakdowns in rationality, which are caused by power and group dynamics. Social pressure in groups also hampers efficiency. In any team with distributed knowledge, the leader must coordinate and channel the information flow.

Bourgault et al. propose that formalisation and autonomy affect the quality of decision-making, which in turn influences teamwork effectiveness. All of these relationships are moderated by the geographic dispersion of the team.
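One way to make this structure explicit is to write it as a pair of linear path equations. This is only a sketch: the symbols ($F$ for formalisation, $A$ for autonomy, $Q$ for quality of decision-making, $T$ for teamwork effectiveness, $g$ for the dispersion group) and the assumption of linear paths are introduced here for illustration and are not taken from the article.

$$
\begin{aligned}
Q &= a_1^{(g)} F + a_2^{(g)} A + \varepsilon_Q \\
T &= b^{(g)} Q + c_1^{(g)} F + c_2^{(g)} A + \varepsilon_T
\end{aligned}
$$

Under these assumptions, $c_1^{(g)}$ and $c_2^{(g)}$ are the direct effects on teamwork effectiveness, the products $a_1^{(g)} b^{(g)}$ and $a_2^{(g)} b^{(g)}$ are the indirect effects via decision quality, and moderation by dispersion means the coefficients may differ between groups $g$.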

They argue that formalisation, which structures and controls decision-making activities, helps distributed teams to share information. Autonomy, by contrast, is a source of conflict, for example with higher management due to a lack of understanding and trust. Ultimately it weakens a project's decision-making, because it diverts horizontal information flow within the team into vertical information flow between project and management.

Quality of decision-making process – the authors argue that groups have more information resources and can therefore make better decisions, but this comes at an increased cost of decision-making. Geographically distributed teams lack signals and have difficulty sharing information. Thus high-quality teamwork benefits from more dispersed knowledge, but low-quality teamwork suffers from a lack of hands-on leadership.

Teamwork effectiveness – this construct has mostly been measured using satisfaction measurements and student samples. Other measures are the degree of task completion, goal achievement, and self-efficacy (intent to stay on the team, ability to cope, perceived individual performance, perceived team performance, satisfaction with the team). Bourgault et al. measure teamwork effectiveness by asking for the perceived performance on task completion, goal achievement, information sharing, conflict resolution, problem solving, and creating a preferable and sustainable environment.

The authors' quantitative analysis shows that in moderately dispersed teams all direct and indirect effects can be substantiated, with the exception of the influence of autonomy on the quality of decision-making. Similarly, in highly dispersed teams all direct and indirect effects could be supported, except for the direct influence of formalisation on teamwork effectiveness.

Bourgault et al. conclude with three recommendations for practice: (1) Distributing a team contributes to high-quality decisions, although it seems to come at a high cost. (2) Autonomous teams achieve better decisions, "despite the fear of an out of sight out of control syndrome". (3) Formalisation adds value to teamwork, and the more distributed the team is, the more value it adds.