Everyone, please vote for the approach you think is right. Let me outline both for you.

Yesterday I read The Black Swan by Nassim Nicholas Taleb. It is a great book. In short, it is about our (as in humankind's) inability to predict rare events. He details a lot of psychological reasons (e.g., tunneling, the narrative fallacy) why we are not able to predict these Black Swans, and he also shows what we can do about it. Great book, highly recommended. Anyway, yesterday I was reading page 159 (for those who have a copy handy), where he makes the hypothetical argument that we think about project deadlines as if they were probabilistically the same as our life expectancy, partly because that's how we evolved. So what do you think: is that really true? But let's understand that distinction in detail first.

1) Living People

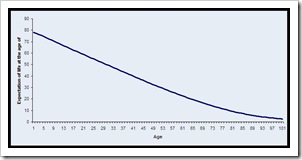

Life expectancy figures are at the very centre of an actuary's daily life, at least for those who insure health and life &c. When you meet one at a party you'll understand how exciting this topic can be. What life tables do is look at the distribution of ages at death within a population: deaths are ordered neatly by age, and from them the actuaries compute the probability of dying before your next birthday. That in turn gives you an expected age at death. By definition the expected age is an average (the mean), so strictly speaking it is not the age by which 50% of your fellow birthday-boys and -girls will be dead; that would be the median.
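To make that computation concrete, here is a minimal sketch of how expected remaining years e_x fall out of the yearly death probabilities q_x. It is not the CDC's exact method: I'm assuming a simplified table where deaths happen, on average, halfway through each year of age, and the q values in the usage part are a made-up five-age toy population, not real data.

```python
from itertools import accumulate


def life_expectancy(q):
    """Return e_x (expected remaining years) for each age x of a life table.

    q[x] is the probability that someone alive at exact age x dies before
    age x + 1. Assumes q[x] < 1 for every age except the last, where
    q[-1] == 1.0 (nobody survives the table), and that deaths happen, on
    average, halfway through each year of age.
    """
    radix = 100_000.0                  # conventional starting cohort size
    alive = [radix]                    # l_x: survivors at exact age x
    for qx in q:
        alive.append(alive[-1] * (1.0 - qx))

    # L_x: person-years lived between ages x and x + 1 (half-year rule)
    lived = [(alive[x] + alive[x + 1]) / 2 for x in range(len(q))]

    # T_x: person-years still to be lived from age x onwards (reverse cumsum)
    still_to_live = list(accumulate(reversed(lived)))[::-1]

    # e_x = T_x / l_x (zip stops after the last age in q)
    return [t / l for t, l in zip(still_to_live, alive)]


if __name__ == "__main__":
    toy_q = [0.1, 0.2, 0.3, 0.5, 1.0]  # hypothetical toy population
    for age, e in enumerate(life_expectancy(toy_q)):
        print(f"age {age}: {e:.2f} more years, {age + e:.2f} in total")
```

Even in this toy table the remaining years shrink with every birthday while the expected total age keeps creeping up, which is the "living people" pattern discussed below.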

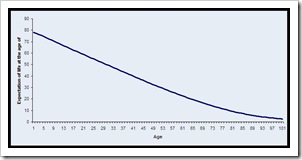

If you look up the tables (they chart quite nicely, too; cf. the graph), you'll see that in the US in 2004 the expected age for a newborn was 77.8 years. Some newborns (a saddening 680 out of every 100,000, that is) die before they reach their first birthday. So if you made it there, you can expect to live for another 77.4 years, which lets you expect your death shortly after your 78th birthday. When you turn 30, you can expect to live for 49.3 more years (79.3 in total); when you reach 50, you can expect another 30.9 years (80.9 in total), and so on.

Source: CDC Life table for the total population: United States, 2004
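The arithmetic in the paragraph above can be written out as a tiny sketch. It only re-uses the four CDC figures quoted there; the function name is my own illustration.

```python
# Remaining life expectancy e_x at selected ages, as quoted above from the
# CDC life table for the total US population, 2004.
REMAINING_YEARS = {0: 77.8, 1: 77.4, 30: 49.3, 50: 30.9}


def expected_total_age(table):
    """Map each attained age to the expected age at death (age + e_x)."""
    return {age: age + e_x for age, e_x in table.items()}


if __name__ == "__main__":
    for age, total in expected_total_age(REMAINING_YEARS).items():
        print(f"age {age:2d}: {REMAINING_YEARS[age]:4.1f} more years -> {total:.1f} in total")
```

The remaining years fall with age, yet the expected total rises: surviving to a given age is itself information that you are not among those who died earlier.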

What if project delays behaved like a living population?

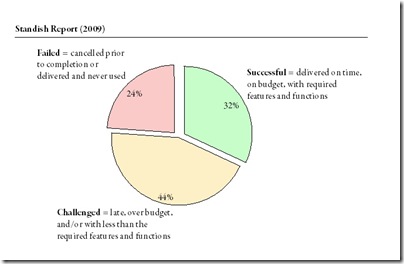

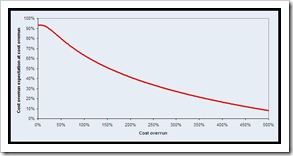

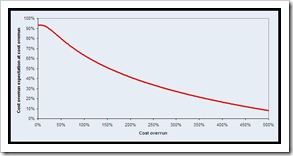

In the book Taleb argues that we typically think of project delays as having the same probabilistic properties as life expectancy curves. That is, on average all projects are delayed by 3 weeks, and a project that is already 2 weeks late will be delayed by an additional 1.5 weeks, and so on. Since I don't have nice raw data, and my own data pool is not yet big enough for an analysis like that, I once again plundered the cost overrun figures from the Standish Group report. Computed and charted, it looks like this:

What does it say? Well, while the project is still immaculate with 0% cost overrun, you would expect it to overrun its budget by +98%. If your project already shows a budget overrun of +100%, it will need another +63% (+163% in total); if it has overrun its budget by +300%, you would set aside another +27% (+327% in total), and so on.
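For readers who want to try this with their own project data, here is a minimal sketch of how such a "remaining overrun" figure can be computed from raw final overruns. I'm assuming the same conditional-mean definition life tables use (average extra overrun among projects that got at least as far as yours). The overrun values are made up for illustration; they are NOT the Standish Group figures.

```python
# Hypothetical final cost overruns (in % of budget) of finished projects.
# Made-up illustration data, NOT the Standish Group figures.
OVERRUNS = [10, 20, 35, 50, 60, 80, 120, 150, 250]


def expected_remaining_overrun(sample, current):
    """Mean extra overrun among projects whose final overrun reached `current`."""
    reached = [v for v in sample if v >= current]
    if not reached:
        raise ValueError("no project in the sample got that far")
    return sum(v - current for v in reached) / len(reached)


if __name__ == "__main__":
    for current in (0, 100, 200):
        extra = expected_remaining_overrun(OVERRUNS, current)
        print(f"at +{current}%: expect +{extra:.1f}% more (+{current + extra:.1f}% in total)")
```

With this bounded toy sample the expected extra overrun shrinks as the current overrun grows, just like remaining life expectancy.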

2) The Black Swan

So what are these Black Swans all about? Here is an excerpt from the McKinsey Quarterly (No. 1, 2009) in which the author summarises his central thesis beautifully:

Before Europeans discovered Australia, we had no reason to believe that swans could be any other color but white. But they discovered Australia, saw black swans, and revised their beliefs. My idea in The Black Swan is to make people think of the unknown and of the potency of the unknown, particularly a certain class of events that you can’t imagine but can cost you a lot: rare but high-impact events.

So my black swan doesn’t have feathers. My black swan is an event with three properties. Number one, its probability is low, based on past knowledge. Two, although its probability is low, when it happens it has a massive impact. And three, people don’t see it coming before the fact, but after the fact, everybody saw it coming. So it’s prospectively unpredictable but retrospectively predictable.

Now that we’re in this financial crisis, for example, everybody saw it coming. But did they own bank stocks? Yes, they did. In other words, they say that they saw it coming because they had some thoughts in the shower about this possibility—not because they truly took measures to protect themselves from it.

Now, a black swan can be a negative event like a banking crisis. It also can be positive: inventing new technology, making new discoveries, meeting your mate, writing a best seller, or developing a cure for cancer, baldness, or bad breath. In The Black Swan, I say that in the historical and socioeconomic domain, black swans are everything. If you ignore black swans, you’ve got nothing. And I showed that the computer, the Internet, and the laser—three recent technological black swans—came out of nowhere. We didn’t know what they were, and when we had them right before our eyes we didn’t know what to do with them. The Internet was not built as something to help people communicate in chat rooms; it was a military application and it evolved.

So these things have a life of their own. You cannot predict a black swan. We also have some psychological blindness to black swans. We don’t understand them, because, genetically, we did not evolve in an environment where there were a lot of black swans. It’s not part of our intuition.

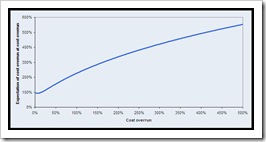

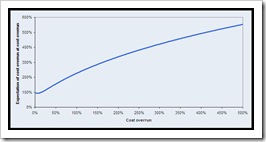

In The Black Swan he argues on page 159 that when we predict project schedules we tend to do so without looking for external events. In his example of a publishing deadline, these may be a sick grandmother, sudden financial trouble that forces the author to take on a night-shift job, or a terrorist attack that troubles your mind for some months. These things happen, yet we never acknowledge them in the first place. So he argues that a project that is 3 months late should be expected to be late by another 5 weeks; if it is still not ready after 5 months, you should expect another 6 weeks; and at a one-year delay, you should rather expect it to be delayed by another 5 years than expect it to be ready within the next 2 weeks. In other words, he argues that in reality the marginal expected project delay increases rather than decreases.
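One way to make "the marginal expected delay increases" precise is a power-law (Pareto) model of delays. This is my assumption for illustration, not the exact model Taleb uses on page 159. Take a delay $X$ with minimum $x_m > 0$ and tail index $\alpha > 1$. The expected additional delay, given the project is already $u \ge x_m$ late, then grows linearly in $u$:

$$
P(X > x) = \left(\frac{x_m}{x}\right)^{\alpha}, \qquad x \ge x_m,
$$

$$
E[X - u \mid X > u] = \frac{\int_u^{\infty} P(X > x)\,dx}{P(X > u)}
= \frac{x_m^{\alpha}\, u^{1-\alpha} / (\alpha - 1)}{x_m^{\alpha}\, u^{-\alpha}}
= \frac{u}{\alpha - 1}.
$$

For a bounded distribution such as human lifespans the same quantity shrinks toward zero as $u$ approaches the maximum age, which is exactly the contrast between the two views.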

So if we compute the same Standish figures under these probabilistic assumptions, we get the following picture:

So what do these numbers tell us? Well, if the project is on budget, we had better expect a +98% budget overrun. If, however, it is already +100% over budget, you had better expect +226% more (a +326% total budget overrun); and once you get to +326%, you should expect another +441% in additional costs, adding up to a whopping +767%. You get the idea.
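To put the two regimes side by side, here is a small sketch that re-uses only the figures quoted in this post (the "living people" numbers from section 1 and the Black Swan numbers above). The helper names are my own illustration; the point is simply that the extra overrun shrinks in one regime and grows in the other.

```python
# (current overrun %, expected additional overrun %) pairs quoted in the text.
LIVING = [(0, 98), (100, 63), (300, 27)]
BLACK_SWAN = [(0, 98), (100, 226), (326, 441)]


def totals(pairs):
    """Expected total overrun at completion for each current overrun."""
    return [current + extra for current, extra in pairs]


def marginal_trend(pairs):
    """Classify how the expected additional overrun moves as overrun grows."""
    extras = [extra for _, extra in pairs]
    if all(a > b for a, b in zip(extras, extras[1:])):
        return "decreasing"
    if all(a < b for a, b in zip(extras, extras[1:])):
        return "increasing"
    return "mixed"


if __name__ == "__main__":
    for name, pairs in (("living people", LIVING), ("black swan", BLACK_SWAN)):
        print(f"{name}: totals {totals(pairs)}, marginal overrun {marginal_trend(pairs)}")
```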

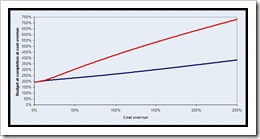

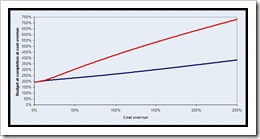

So if we chart that as an "expectation of total budget at completion at cost overrun of" diagram, the curves look like this:

3) Your turn – the vote

What do you think holds for projects: do their cost overruns show the probabilistic features of living people, or are they Black Swans?

Thanks.