This is a gem.

Craig Brown of the 'Better Projects' blog (here) created a presentation on Jurgen Appelo's Definite List of Project Management Methodologies. Jurgen first published his list on his blog over at noop.nl and has now moved it into a Google Knol here. Craig turned it into a great tongue-in-cheek presentation. I very much enjoyed it, so here it is:

Archive for January 2009

The Definite List of Project Management Methodologies

Friday, January 16th, 2009

Understanding software project risk – a cluster analysis (Wallace et al., 2004)

Wednesday, January 14th, 2009

Wallace, Linda; Keil, Mark; Rai, Arun: Understanding software project risk – a cluster analysis; in: Information & Management, Vol. 42 (2004), pp. 115–125.

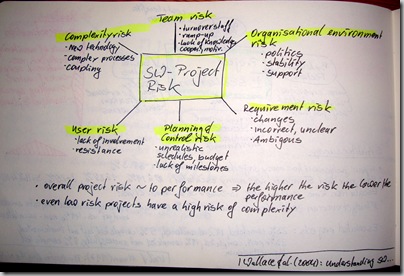

Wallace et al. conducted a survey among 507 software project managers worldwide. They tested a vast set of risks and tried to group these risks into 3 clusters of projects: high, medium, and low risk projects.

The authors assumed 6 dimensions of software project risks –

- Team risk – turnover of staff, ramp-up time; lack of knowledge, cooperation, and motivation

- Organisational environment risk – politics, stability of organisation, management support

- Requirement risk – changes in requirements, incorrect and unclear requirements, and ambiguity

- Planning and control risk – unrealistic budgets, schedules; lack of visible milestones

- User risk – lack of user involvement, resistance by users

- Complexity risk – new technology, automating complex processes, tight coupling

Wallace et al. report two interesting findings. Firstly, overall project risk is negatively correlated with project performance – the higher the risk, the lower the performance! Secondly, they found that even low-risk projects have a high complexity risk.
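To make the clustering idea more tangible, here is a minimal sketch of grouping projects into three risk clusters with a plain k-means. Everything in it is an assumption for illustration: the six scores per project (one per risk dimension, on a made-up 1 to 7 scale), the project data, the starting centroids, and the choice of k-means itself, which may differ from the procedure the authors actually used.

```python
import math

def kmeans(points, centroids, iterations=20):
    """Plain k-means: assign each point to its nearest centroid, then update centroids."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Mean per dimension; an empty cluster keeps its previous centroid.
        new_centroids = [
            tuple(sum(dim) / len(c) for dim in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids, clusters

# Hypothetical scores: (team, organisation, requirements, planning, user, complexity)
projects = [
    (1.5, 2.0, 1.8, 1.6, 1.9, 4.5),
    (2.0, 1.7, 2.1, 1.9, 1.5, 4.8),
    (3.5, 3.8, 3.6, 3.9, 3.4, 4.9),
    (3.9, 3.5, 4.0, 3.6, 3.8, 5.1),
    (5.8, 6.0, 6.2, 5.9, 5.5, 6.3),
    (6.1, 5.7, 5.9, 6.3, 6.0, 6.0),
]
initial = [projects[0], projects[2], projects[4]]  # hand-picked starting centroids

centroids, clusters = kmeans(projects, initial)

# Name the clusters by their average score across all six dimensions.
order = sorted(range(len(centroids)), key=lambda i: sum(centroids[i]) / len(centroids[i]))
labelled = {name: clusters[i] for name, i in zip(["low", "medium", "high"], order)}

for name, members in labelled.items():
    print(name, len(members))
```

The made-up data is shaped so that even the low-risk cluster scores high on the complexity dimension, mirroring the second finding; it says nothing about the real survey data.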

Understanding the Nature and Extent of IS Project Escalation (Keil & Mann, 1997)

Tuesday, January 13th, 2009

Keil, Mark; Mann, Joan: Understanding the Nature and Extent of IS Project Escalation – Results from a Survey of IS Audit and Control Professionals; in: Proceedings of The Thirtieth Annual Hawaii International Conference on System Sciences, 1997.

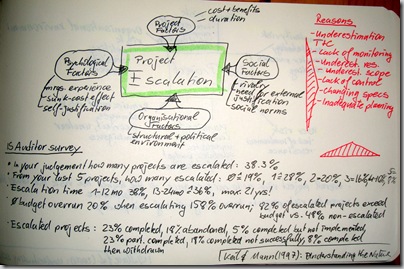

"Many runaway IS projects represent what can be described as continued commitment to a failing course of action, or "escalation" as it is called in management literature." Keil & Mann argue that escalation of projects can be explained with four different factors – (1) project factors, (2) social factors, (3) psychological factors, and (4) organisational factors.

- Project Factors – cost & benefits, duration of the project

- Social Factors – rivalry between projects, need for external justification, social norms

- Organisational Factors – structural and political environment of the project

- Psychological Factors – managers' previous experience, sunk-cost effects, self-justification

In 1995 Keil & Mann conducted a survey among IS auditors. Among their most interesting findings are:

- 38.3% of all SW development projects show some level of escalation (original question: 'In your judgement, how many projects are escalated?').

- When asked 'From your last 5 projects how many escalated?', 19% of the auditors said none, 28% said 1, 20% said 2, 16% said 3, 10% said 4 and 8% said all 5.

- Schedule escalation: 38% of projects by 1-12 months, 36% by 13-24 months; the maximum in the sample was 21 years.

- The average budget overrun of projects was 20%; when projects escalate, the average budget overrun is 158%.

- 82% of all escalated projects run over their budget, whilst only 48% of all non-escalated projects run over their budget

- Success rate of escalated projects is devastating – of all escalated projects 23% were completed successfully, 18% were abandoned, 5% were completed but never implemented, 23% were partially completed, 18% were unsuccessfully completed, and 8% were completed and then withdrawn.
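As a quick plausibility check, the answers to the "last 5 projects" question can be turned into an implied escalation rate and compared with the 38.3% judgement figure. This is my own back-of-the-envelope arithmetic, not something taken from the paper; it assumes the reported shares can be used as weights as they stand, even though they add up to 101% because of rounding.

```python
# Share of auditors (in %) by number of escalated projects among their last 5.
shares = {0: 19, 1: 28, 2: 20, 3: 16, 4: 10, 5: 8}

total = sum(shares.values())  # 101, because the reported shares are rounded
mean_escalated = sum(k * share for k, share in shares.items()) / total
implied_rate = mean_escalated / 5

print(f"{mean_escalated:.2f} of 5 projects escalated on average")
print(f"implied escalation rate: {implied_rate:.1%}")  # 38.8%
```

Under these assumptions the two questions lead to nearly the same number, which suggests the auditors' overall judgement and their concrete recollections are consistent.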

Furthermore, Keil & Mann test for the reasons for escalation behaviour, based on their 4 factor concept. They found the main reasons for project escalations were

- Underestimation of time to completion

- Lack of monitoring

- Underestimation of resources

- Underestimation of scope

- Lack of control

- Changing specifications

- Inadequate planning

Why Software Projects Escalate – An Empirical Analysis and Test of Four Theoretical Models (Keil et al., 2000)

Monday, January 12th, 2009

Keil, Mark; Mann, Joan; Rai, Arun: Why Software Projects Escalate – An Empirical Analysis and Test of Four Theoretical Models; in: MIS Quarterly, Vol. 24 (2000), No. 4, pp. 631-664.

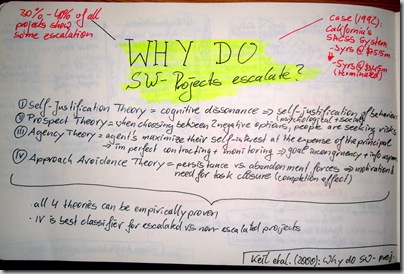

The authors write: "Software projects can often spiral out of control to become 'runaway systems'…". Keil et al. define escalation behaviour as constantly adding resources to the project. Thus escalated projects typically overrun their schedule and budget. The authors describe the case of the Statewide Automated Child Support System (SACSS) of California's Dept. of Social Services. The project was started in 1992 with a projected budget of USD 75.5m and a go-live date in 1995. The project escalated, cost an estimated USD 345m, and was finally terminated in 1997 without any deliverables in place.

Based on a large-scale survey of IS auditors, Keil et al. found that 30-40% of all software development projects show some degree of escalation. The authors then analyse four theoretical models that explain escalation – (1) Self-Justification Theory, (2) Prospect Theory, (3) Agency Theory, (4) Approach Avoidance Theory.

Self-Justification Theory – SJT is grounded in Festinger's theory of cognitive dissonance. Put simply, self-justification is the retrospective rationalisation of behaviour that violates internal or external beliefs, attitudes, or norms. As the Wikipedia article on SJT describes it, self-justification typically manifests in two forms: internal or external. Internal SJT strategies are changing the violated attitude and downplaying or denying the negative consequences, whilst external SJT strategies are all sorts of external excuses, from bad luck to lack of competencies. Keil et al. argue that two effects are relevant for escalation behaviour – social and psychological self-justification. Whilst psychological self-justification is a strategy to overcome dissonance, social pressures increase the need for self-justification, e.g., to save face.

Prospect Theory – Kahneman & Tversky's Prospect Theory and their Cumulative Prospect Theory describe decision-making under uncertainty and risk. Keil et al. argue that Prospect Theory explains escalation behaviour, because the theory postulates that when choosing between two adverse outcomes, decision-makers become risk-seeking. This is commonly also referred to as the 'sunk cost effect'.
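The risk-seeking behaviour in the loss domain can be illustrated with the Prospect Theory value function. The sketch below is a simplification under stated assumptions: it uses the commonly cited parameter estimates from Tversky & Kahneman's 1992 paper (curvature 0.88, loss aversion 2.25), it leaves out probability weighting entirely, and the monetary amounts are invented. Think of the sure loss as stopping the project now and the gamble as carrying on.

```python
ALPHA = 0.88   # curvature: diminishing sensitivity to larger gains and losses
LAMBDA = 2.25  # loss aversion: losses weigh more heavily than equal gains

def value(x):
    """Subjective value of an outcome x relative to the reference point 0."""
    if x >= 0:
        return x ** ALPHA
    return -LAMBDA * ((-x) ** ALPHA)

def prospect_value(outcomes):
    """outcomes: list of (probability, outcome) pairs; no probability weighting."""
    return sum(p * value(x) for p, x in outcomes)

# Hypothetical choice between two adverse options with the same expected loss.
sure_loss = [(1.0, -50)]              # abandon now, accept a certain loss of 50
gamble = [(0.5, -100), (0.5, 0)]      # continue: lose 100 or lose nothing

print("sure loss:", round(prospect_value(sure_loss), 1))
print("gamble:   ", round(prospect_value(gamble), 1))

# Mirror image in the gain domain, where the same function is risk-averse.
sure_gain = [(1.0, 50)]
gain_gamble = [(0.5, 100), (0.5, 0)]
print("prefers gamble over sure loss:", prospect_value(gamble) > prospect_value(sure_loss))
print("prefers sure gain over gamble:", prospect_value(sure_gain) > prospect_value(gain_gamble))
```

With these parameters the gamble looks less bad than the certain loss, although both have the same expected value, which is the pattern Keil et al. connect to continuing a failing project.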

Agency Theory – Jensen & Meckling's concept of principal-agent relationships covers many examples of principals delegating decision competencies or execution of tasks to agents. A principal-agent relationship turns sour (aka the principal-agent problem) when goal incongruence and information asymmetry create a constellation of imperfect contracting and monitoring. In general terms – the agents maximise their self-interest at the expense of the principal. Simplest real-world example – the software integrator who you hired to do most of your work never wants your project to finish.

Approach Avoidance Theory – Rubin & Brockner's concept of approach avoidance holds that every approach vs. avoidance decision is driven by the iconic little angel vs. little devil. In the case of escalated projects, these forces encourage either persistence with or abandonment of the project. Three factors explain why projects are not terminated – (1) size of reward for goal attainment, (2) withdrawal costs, and (3) goal proximity. Keil et al. argue that especially the third factor, 'goal proximity', creates a completion effect, which is explained by the need for task closure. They argue that this is a better conceptualisation of escalation symptoms than the sunk cost effect, aka throwing good money after bad. They describe that completion effects pull an individual towards the goal whilst sunk cost effects push an individual further. A beautiful real-life example is the 90%-completion syndrome:

This syndrome refers to the tendency for estimates of work completed to increase steadily until a plateau of 90% is reached. Thereafter, programmer estimates of the fraction of work completed increase very slowly. In some cases, inaccurate estimation leads to situations in which software projects are reported to be 90% complete for half of the entire duration of the project, an obvious impossibility (Brooks 1975).

Keil et al. test 6 constructs taken from these 4 theories which were previously connected to escalation behaviour – psychological self-justification, social self-justification, sunk cost effect, goal incongruence, information asymmetry, and completion effect.

With a series of pairwise logistic regression models comparing escalated and non-escalated projects, all 6 constructs, and therefore all 4 theories, are empirically supported. However, the best classifier for escalation vs. non-escalation is the completion effect.

Institutional reform (Stone, 2008)

Friday, January 9th, 2009

Stone, Alastair: Institutional reform – A decision-making process view; in: Research in Transportation Economics, Vol. 22 (2008), No. 1, pp. 164-178.

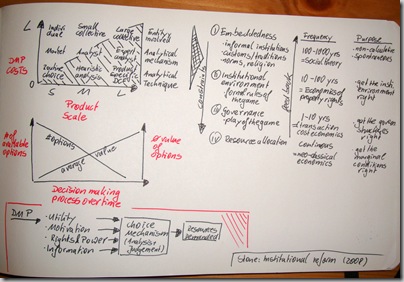

Stone analyses the fundamentals of the DMP (decision-making process) and proposes a model which he then applied to the example of urban land passenger transport. This summary focuses on the analysis of the DMP. Firstly, Stone draws a model which combines DMP costs with product scale and assumes a positive correlation between these two = the larger the product the higher the DMP costs. He outlines the entity involved, analytical mechanisms, and analytical techniques, e.g., for large-scale products the entity is usually a large collective, an expert analyst is the mechanism of choice for analysis, and a product specific discounted cash flow is the technique for the analysis. Secondly, Stone shows that over time in the DMP the number of options decreases, whilst the average value of each option increases.

More interestingly he looks at different decisions and identifies 4 levels of societal decision processes

- Embeddedness = informal institutions, customs and traditions, norms and religion (happens every 100 to 1,000 years; is explained by social theory; and the purpose of it is non-calculative and spontaneous)

- Institutional environment = formal rules of the game (happens every 10 to 100 years; is explained by economics of property rights; and the purpose of it is to get the institutional environment right)

- Governance = play of the game (happens every 1 to 10 years; is explained by transaction cost economics; and the purpose of it is to get the governance structures right)

- Resource allocations (happen continuously; is explained by neo-classical economics; and have the purpose of getting the marginal conditions right)

Higher levels impose constraints on lower-level decisions, whilst these return feedback to the top. Finally, he outlines the DMP:

Input Variables (utilities, motivation, rights & power, and information) go into the Choice Mechanism (analysis & judgement) and result in a Resource Demand.

Decision-Making for New Technology – A Multi-Actor, Multi-Objective Method (Cunningham & Lei, 2007)

Friday, January 9th, 2009

Cunningham, Scott W.; van der Lei, Telli E.: Decision-Making for New Technology: A Multi-Actor, Multi-Objective Method; in: Management of Engineering and Technology, 2007, No. 5-9, pp. 1176-1185.

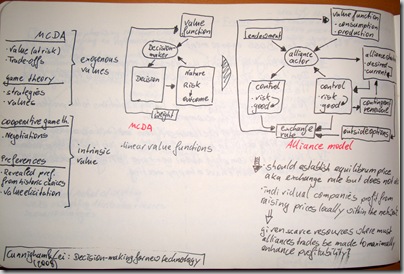

Cunningham & Lei present a method that does not aggregate the individuals' preferences but instead considers strategic and economic factors in the assessment of multi-criteria decision analysis (MCDA).

They explicitly model exogenous and intrinsic values into their criteria. The exogenous values are based on MCDA (value at risk & trade-offs), and game theory (strategies & values). The intrinsic values are based on cooperative game theory (negotiations), and preferences (revealed preferences from historic choices & value elicitation).

Using weighted linear value functions they model the system of a decision-maker on new technology. Then the authors expand their system model to include alliances and outside options. Their results are somewhat unexpected: the system does not agree on an equilibrium price (aka exchange rate), because individual companies in the alliance profit from raising prices locally within the network. Thus they ask: "Given scarce resources, where must alliances trades be made to maximally enhance profitability?"

Uncertainty Sensitivity Planning (Davis, 2003)

Thursday, January 8th, 2009

Davis, Paul K.: Uncertainty Sensitivity Planning; in: Johnson, Stuart; Libicki, Martin; Treverton, Gregory F. (Eds.): New Challenges – New Tools for Defense Decision Making, 2003, pp. 131-155; ISBN 0-8330-3289-5.

Who is better at planning for very complex environments than the military? On projects we set up war rooms, we draw mind maps which look like tactical attack plans, and sometimes we use a very militaristic language. So what's more obvious than a short Internet search on planning and the military?

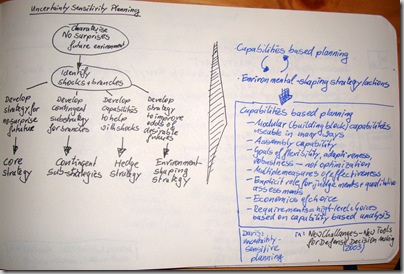

Davis describes a new planning method – Uncertainty Sensitivity Planning. Traditional planning characterises a no-surprises future environment – much like the planning we usually do. The next step is to identify shocks and branches, which results in four different strategies:

- Core Strategy = Develop a strategy for no-surprises future

- Contingent Sub-Strategies = Develop contingent sub-strategies for all branches of the project

- Hedge Strategy = Develop capabilities to help with shocks

- Environmental Shaping Strategy = Develop strategy to improve odds of desirable futures

Uncertainty Sensitivity Planning combines capabilities based planning with environmental shaping strategy and actions.

Capabilities based planning plans along modular capabilities, i.e., building blocks which are usable in many different ways. On top of that an assembly capability to combine the building blocks needs to be planned for. The goal of planning is to create flexibility, adaptiveness, and robustness – it is not optimisation. Thus multiple measurements of effectiveness exist.

During planning there needs to be an explicit role for judgements and qualitative assessments. Economics of choice are explicitly accounted for.

Lastly, planning requirements are reflected in high-level choices, which are based on capability based analysis.

Application of Multicriteria Decision Analysis in Environmental Decision Making (Kiker et al., 2005)

Thursday, January 8th, 2009

Kiker, Gregory A.; Bridges, Todd S.; Varghese, Arun; Seager, Thomas P.; Linkov, Igor: Application of Multicriteria Decision Analysis in Environmental Decision Making; in: Integrated Environmental Assessment and Management, Vol. 1 (2005), No. 2, pp. 95-108.

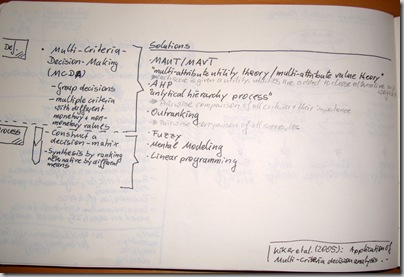

Kiker et al. review Multi-Criteria Decision Analysis (MCDA). The authors define MCDA as decisions, typically group decisions, with multiple criteria that carry different monetary and non-monetary values. The MCDA process follows two steps – (1) construct a decision matrix, (2) synthesise it by ranking the alternatives by different means.

What are solutions/methods to apply MCDA in practice?

- MAUT & MAVT (multi-attribute utility theory & multi-attribute value theory) = each score is given a utility, then utilities are weighted and summed up to choose an alternative

- AHP (analytical hierarchy process) = pairwise comparison of all criteria to determine their importance

- Outranking = pairwise comparison of all scenarios

- Fuzzy

- Mental Modelling

- Linear Programming
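The two MCDA steps, building a decision matrix and then synthesising it, are easiest to see with the weighted-sum approach described under MAUT/MAVT. The following is a minimal sketch only: the criteria, weights, worst/best anchors, and alternatives are all hypothetical, and the linear utility function is just one simple choice among many.

```python
def linear_utility(score, worst, best):
    """Map a raw score onto 0..1, where `worst` gives 0 and `best` gives 1."""
    return (score - worst) / (best - worst)

# Hypothetical criteria; weights sum to 1. For cost and risk, lower is better,
# which is expressed by putting the larger number in `worst`.
criteria = {
    "cost":    {"weight": 0.5, "worst": 100.0, "best": 20.0},
    "risk":    {"weight": 0.3, "worst": 10.0,  "best": 0.0},
    "benefit": {"weight": 0.2, "worst": 0.0,   "best": 10.0},
}

# Step 1: the decision matrix (alternatives x criteria, raw scores).
alternatives = {
    "A": {"cost": 40.0, "risk": 6.0, "benefit": 7.0},
    "B": {"cost": 80.0, "risk": 2.0, "benefit": 9.0},
    "C": {"cost": 60.0, "risk": 4.0, "benefit": 5.0},
}

# Step 2: synthesis by weighting and summing the utilities per alternative.
totals = {
    name: sum(
        spec["weight"] * linear_utility(scores[crit], spec["worst"], spec["best"])
        for crit, spec in criteria.items()
    )
    for name, scores in alternatives.items()
}

ranking = sorted(totals, key=totals.get, reverse=True)
for name in ranking:
    print(name, round(totals[name], 3))
```

Changing the weights is the obvious sensitivity test here: if cost matters less and risk more, alternative B can overtake A.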

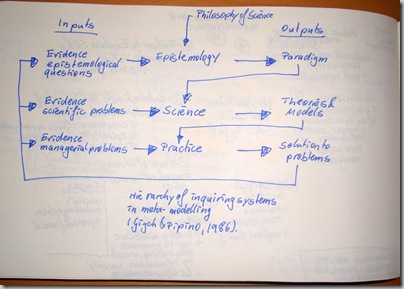

Hierarchy of inquiring systems in meta-modelling (Gigch & Pipino, 1986)

Wednesday, January 7th, 2009

This concludes our little journey into constructivism, complex system thinking, and the big question: "What do we really really really know?"

| Inputs | | Philosophy of Science | | Outputs |
| --- | --- | --- | --- | --- |
| Evidence, epistemological questions | → | Epistemology | → | Paradigm |
| Evidence, scientific problems | → | Science | → | Theories & Models |
| Evidence, managerial problems | → | Practice | → | Solution to problems |

System of Systems (Flood & Jackson, 1991) Decision making process (Simon, 1976)

Wednesday, January 7th, 2009

Not really a summary of two articles, but rather a summary of two constructivists' concepts.

Firstly, Flood and Jackson propose a System of Systems and point out the modelling approaches suitable for these specific systems:

| | Unitary | Pluralist | Coercive |
| --- | --- | --- | --- |
| Simple | Operation Research, Systems Analysis, Systems Engineering, System Dynamics | Social Systems Design, Strategic Assumption Surfacing and Testing | Critical Systems Heuristics |
| Complex | Viable Systems Model, General Systems Theory, Socio-Technical Systems Thinking, Contingency Theory | Interactive Planning, Soft Systems Methodology | |

Secondly, because at some point in time I just had to write it down again, Simon’s constructivist process of decision-making, originally published in 1979:

Intelligence (Is vs. Ought situation) —> Design (Problem Solving) —> Choice —> Implementation —> Evaluation

With the extension of decision loops if no choice can be made as proposed by Le Moigne, revisiting the Design, Intelligence, or even the Initial step:

- Re-Design – the How

- Re-Finalisation – the What

- Re-Justification – the Why

A Principal Exposition of Jean-Louis Le Moigne’s Systemic Theory (Eriksson, 1997)

Tuesday, January 6th, 2009

Eriksson, Darek: A Principal Exposition of Jean-Louis Le Moigne's Systemic Theory; in: Cybernetics and Human Knowing, Vol. 4 (1997), No. 2-3.

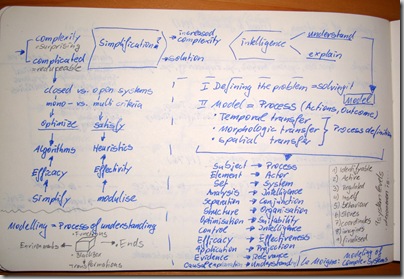

When thinking about complexity and systems one sooner or later comes across Le Moigne. The point of departure is the dilemma of simplification vs. intelligence. Systems therefore have to be distinguished as either complicated, i.e., reducible, or complex, i.e., showing surprising behaviour.

Complicated vs. Complex

This distinction follows the same lines as closed vs. open systems, and mono- vs. multi-criteria optimisation. Closed/mono-criteria/complicated systems can be optimised by using algorithms, simplifying the system, and evaluating the solution by its efficacy. On the other hand, open/multi-criteria/complex systems can only be satisficed by using heuristics, breaking down the system into modules, and evaluating the solution by its effectiveness.

In the case of complex systems simplification only increases the complexity of the problem and will not yield a solution to the problem. Instead of simplification intelligence is needed to understand and explain the system, in other words it needs to be modelled. As Einstein already put it – defining the problem is solving it.

Secondly, to model a complex system is to model a process of actions and outcomes. The process definition consists of three transfer functions – (1) temporal, (2) morphologic, and (3) spatial transfer. In order to make the step from modelling complicated systems to modelling complex systems some paradigms need to change:

- Subject –> Process

- Elements –> Actors

- Set –> Systems

- Analysis –> Intelligence

- Separation –> Conjunction

- Structure –> Organisation

- Optimisation –> Suitability

- Control –> Intelligence

- Efficacy –> Effectiveness

- Application –> Projection

- Evidence –> Relevance

- Causal explanation –> Understanding

The model itself follows a black box approach. For each black box, its function, its ends = objective, its environment, and its transformations need to be modelled. Furthermore the modelling itself understands and explains a system on nine different levels. A phenomenon is

- Identifiable

- Active

- Regulated

- Itself

- Behaviour

- Stores

- Coordinates

- Imagines

- Finalised

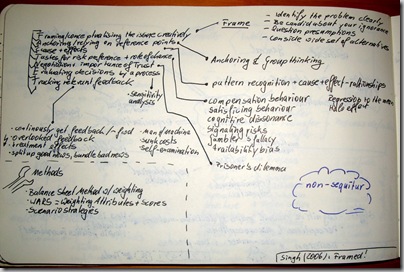

Framed! (Singh, 2006)

Tuesday, January 6th, 2009

Singh, Hari: Framed!; HRD Press, 2006, ISBN: 0874258731


I stumbled upon this book somewhere in the tubes. I admit that the idea of combining a fictional narrative with some scientific subtext appealed to me. Unfortunately for this book, I set the bar at Tom DeMarco's Deadline. On the one hand Singh delivers what seems to be his own lecture on decision-making through his alter ego, Professor Armstrong; on the other hand the fictional two-level story of Larry, the first-person narrator, and the crime mystery around Laura's suicide-turned-murder does not really deliver. Not to mention the superficial references to Chicago, which I found rather off-putting; I think a bit more research and getting off the beaten track could have done much good here. Lastly, I don't much fancy the narrative-framework-driven style so commonly found in American self-help books – and so brilliantly mocked in Little Miss Sunshine.

Anyhow let’s focus on the content. Singh calls his structure for better decision-making FACTNET

- Framing/ conceptualising the issue creatively

- Anchoring/ relying on reference points

- Cause & effect

- Tastes for risk preference & role of chance

- Negotiation & importance of trust

- Evaluating decisions by a process

- Tracking relevant feedback

Frame – Identify the problem clearly, be candid about your ignorance, question presumptions, consider a wide set of alternatives

Anchoring – Anchor your evaluations with external reference points and avoid group thinking

Cause & effect – Recognise patterns and cause-effect relationships, expect regression to the mean, be aware of biases such as the halo effect

Tastes for risk & Role of chance – be aware of compensation behaviour, satisficing behaviour, cognitive dissonance, signaling of risks, gambler’s fallacy, availability bias – all deceptions which negatively impact decision-making

Negotiation & Trust – just two words: Prisoner’s dilemma

Evaluating decisions by a process – Revisit decisions, conduct sensitivity analyses

Tracking relevant feedback – Continuously get feedback & feed-forward, be aware of overlooked feedback, treatment of effects, split up good news and bundle bad news, think about sunk costs, man & machine, and engage in self-examination

Three methods for decision-making are presented in the book – (1) balance sheet methods with applied weighting, (2) WARS = weighting attributes and scores, and (3) scenario strategies.

Lastly, Singh reminded me again of the old motto "Non Sequitur!" – making me aware of all the logical fallacies that occur if something sounds reasonable but 'does not really follow'.

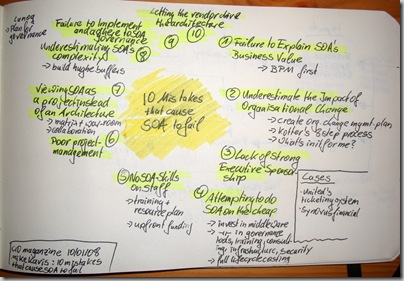

10 Mistakes that Cause SOA to Fail (Kavis, 2008)

Tuesday, January 6th, 2009

Kavis, Mike: 10 Mistakes that Cause SOA to Fail; in: CIO Magazine, 1 October 2008.

I usually don't care much about these industry journals. But since they arrive for free in my mail every other week, I couldn't help but notice this article, which gave a brief overview of two SOA cases – United's new ticketing system and Synovus Financial's banking system replacement.

However, the ten mistakes cited are all too familiar:

- Fail to explain SOA’s business value – put BPM first, then the IT implementation

- Underestimate the impact of organisational change – create change management plans, follow Kotter's eight-step process, answer everyone's question 'What's in it for me?'

- Lack strong executive sponsorship

- Attempt to do SOA on the cheap – invest in middleware, invest in governance, tools, training, consultants, infrastructure, security

- Lack SOA skills in staff – train, plan the resources, secure up-front funding

- Manage the project poorly

- View SOA as a project instead of an architecture – create your matrices, war-room; engage collaboration

- Underestimate SOA's complexity

- Fail to implement and adhere to SOA governance

- Let the vendor drive the architecture